Explore models in AI Toolkit

AI Toolkit supports a wide range of generative AI models, including both Small Language Models (SLMs) and Large Language Models (LLMs).

Within the model catalog, you can explore and utilize models from multiple hosting sources:

- Models hosted on GitHub, such as Llama3, Phi-3, and Mistral, including pay-as-you-go options.

- Models provided directly by publishers, including OpenAI's ChatGPT, Anthropic's Claude, and Google's Gemini.

- Models hosted on Microsoft Foundry.

- Models downloaded locally from repositories like Ollama and ONNX.

- Custom self-hosted or externally deployed models accessible via Bring-Your-Own-Model (BYOM) integration.

You can also deploy models to Microsoft Foundry directly from the model catalog.

Use Microsoft Foundry, Foundry Local, and GitHub models added to AI Toolkit with GitHub Copilot. For more information, check out Changing the model for chat conversations.

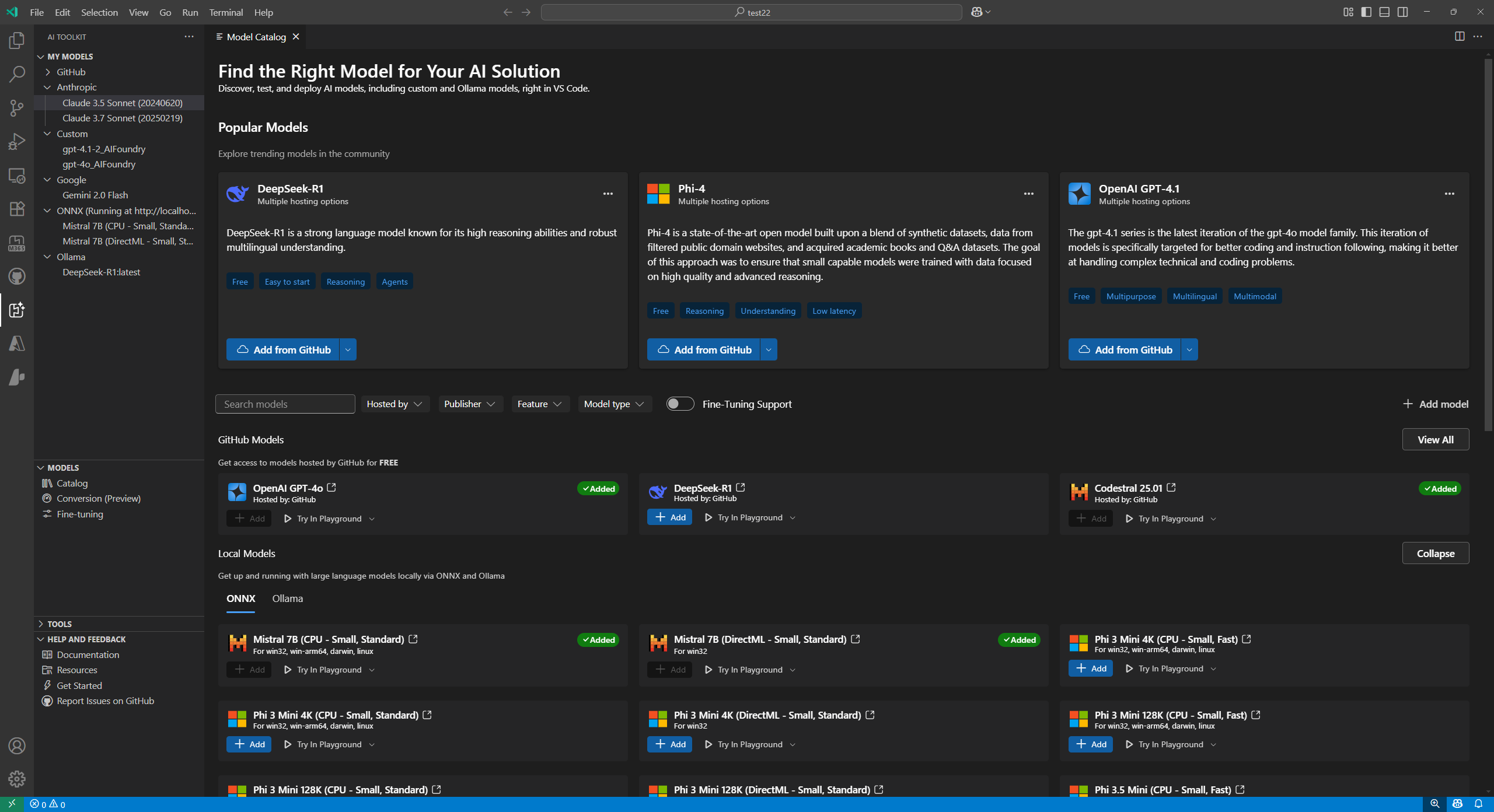

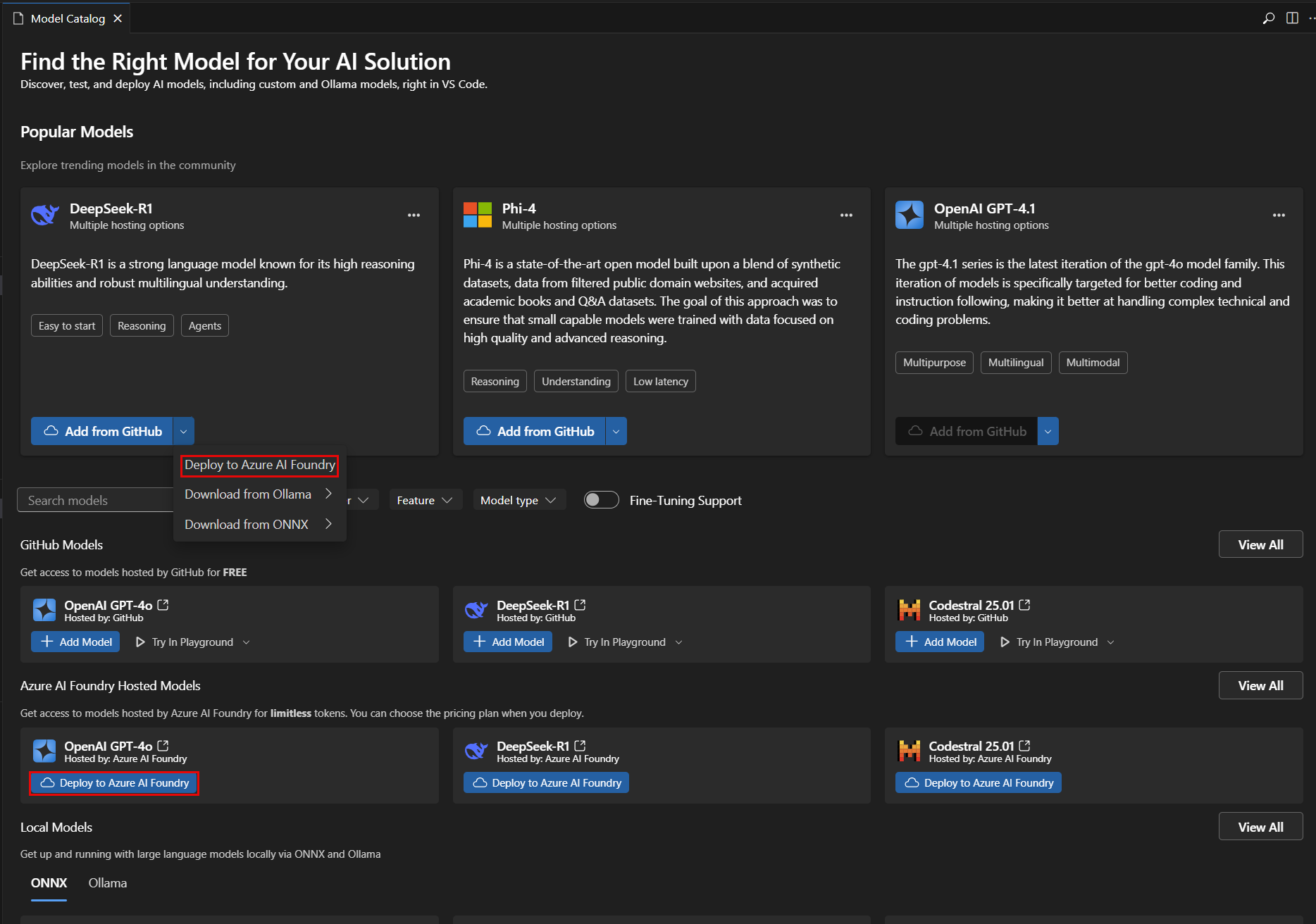

Find a model

To find a model in the model catalog:

-

Select the AI Toolkit view in the Activity Bar

-

Select MODELS > Catalog to open the model catalog

-

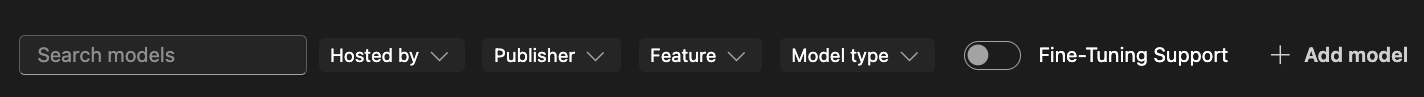

Use the filters to reduce the list of available models

- Hosted by: AI Toolkit supports GitHub, ONNX, OpenAI, Anthropic, Google as model hosting sources.

- Publisher: The publisher for AI models, such as Microsoft, Meta, Google, OpenAI, Anthropic, Mistral AI, and more.

- Feature: Supported features of the model, such as

Text Attachment,Image Attachment,Web Search,Structured Outputs, and more. - Model type: Filter models that can run remotely or locally on CPU, GPU, or NPU. This filter depends on the local availability.

- Fine-tuning Support: Show models that can be used to run fine-tuning.

-

Browse the models in different categories, such as:

- Popular Models is a curated list of widely used models across various tasks and domains.

- GitHub Models provide easy access to popular models hosted on GitHub. It's best for fast prototyping and experimentation.

- ONNX Models are optimized for local execution and can run on CPU, GPU, or NPU.

- Ollama Models are popular models that can run locally with Ollama, supporting CPU via GGUF quantization.

-

Alternatively, use the search box to find a specific model by name or description

Add a model from the catalog

To add a model from the model catalog:

- Locate the model you want to add in the model catalog.

- Select Add on the model card.

- The flow for adding models differs slightly by provider:

  - GitHub: AI Toolkit asks for your GitHub credentials to access the model repository. Once authenticated, the model is added directly into AI Toolkit.

    Note: AI Toolkit now supports GitHub pay-as-you-go models, so you can keep working after passing free tier limits.

  - ONNX: The model is downloaded from ONNX and added to AI Toolkit.

  - Ollama: The model is downloaded from Ollama and added to AI Toolkit.

  - OpenAI, Anthropic, and Google: AI Toolkit prompts you to enter the API key.

    Tip: You can edit the API key later by right-clicking the model and selecting Edit, and you can view the encrypted value in the `${HOME}/.aikt/models/my-models/yml` file.

  - Custom models: Refer to the Add a custom model section for detailed instructions.

- Once added, the model appears under MY MODELS in the tree view, and you can use it in the Playground or Agent Builder.

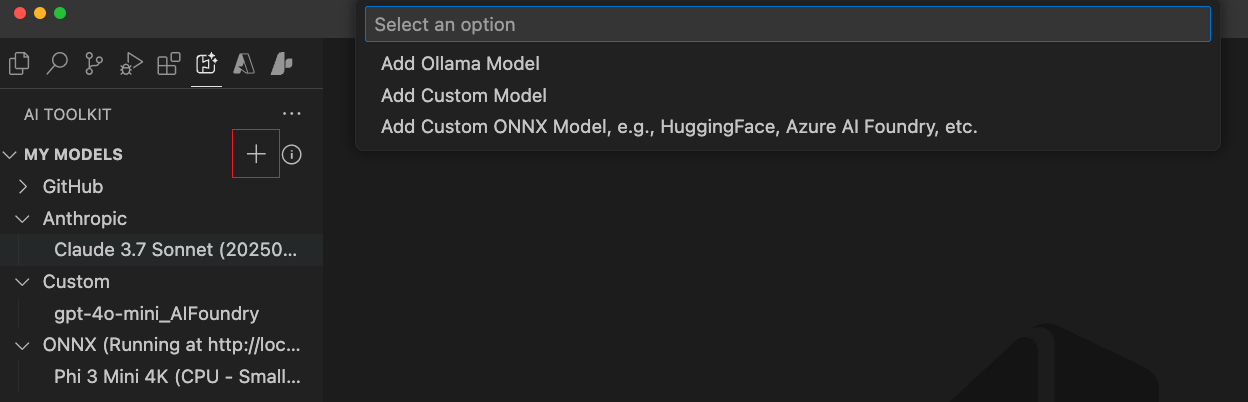

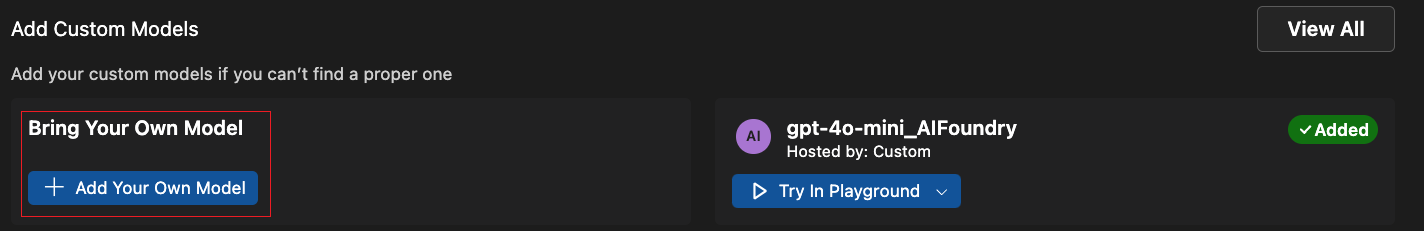

Add a custom model

You can also add your own models that are hosted externally or run locally. There are several options available:

- Add Ollama models from the Ollama library or custom Ollama endpoints.

- Add custom models that have an OpenAI compatible endpoint, such as a self-hosted model or a model running on a cloud service.

- Add custom ONNX models, such as those from Hugging Face, using AI Toolkit's model conversion tool.

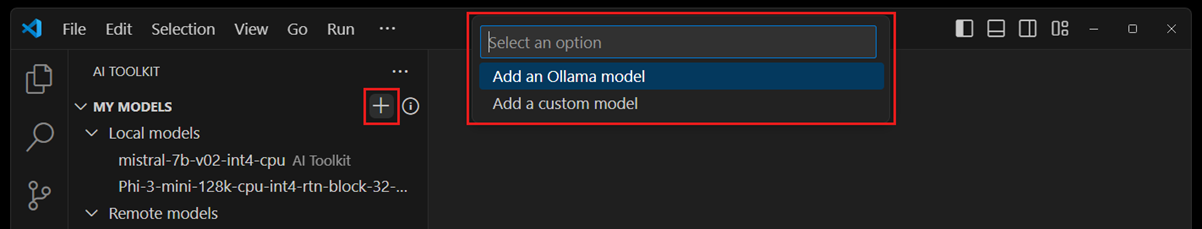

There are several entry points to add models to AI Toolkit:

- From MY MODELS in the tree view, hover over it and select the + icon.

- From the Model Catalog, select the + Add model button from the toolbar.

- From the Add Custom Models section in the model catalog, select + Add Your Own Model.

Add Ollama models

Ollama enables many popular genAI models to run locally on CPU via GGUF quantization. If you have Ollama installed on your local machine with downloaded Ollama models, you can add them to AI Toolkit for use in the model playground.

Prerequisites for using Ollama models in AI Toolkit:

- AI Toolkit v0.6.2 or newer.

- Ollama (tested on Ollama v0.4.1).

To add local Ollama models to AI Toolkit:

- From one of the entry points mentioned above, select Add Ollama Model.

- Next, select Select models from Ollama library.

  If you start the Ollama runtime at a different endpoint, choose Provide custom Ollama endpoint to specify an Ollama endpoint.

- Select the models you want to add to AI Toolkit, and then select OK.

  Note: AI Toolkit only shows models that are already downloaded in Ollama and not yet added to AI Toolkit. To download a model from Ollama, you can run `ollama pull <model-name>`. To see the list of models supported by Ollama, see the Ollama library or refer to the Ollama documentation.

- You should now see the selected Ollama model(s) in the list of models in the tree view.

Note: Attachments are not supported yet for Ollama models, because AI Toolkit connects to Ollama using its OpenAI compatible endpoint, which doesn't support attachments yet.
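To check which models are already downloaded before adding them, you can ask Ollama directly. The following Python sketch reads the model list from Ollama's native `/api/tags` route. It assumes Ollama is listening on its default local address, `http://localhost:11434`; the function names are illustrative, and this is not necessarily how AI Toolkit itself discovers models.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama's default local address. Change it if you run Ollama
# at a custom endpoint.
OLLAMA_BASE_URL = "http://localhost:11434"


def parse_model_names(tags_response: dict) -> List[str]:
    """Extract sorted model names from an Ollama /api/tags response body."""
    return sorted(entry["name"] for entry in tags_response.get("models", []))


def list_local_models(base_url: str = OLLAMA_BASE_URL) -> List[str]:
    """Ask a running Ollama instance which models are downloaded locally."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_model_names(json.load(response))


# Offline usage example with a hand-written response in the same shape.
sample_response = {"models": [{"name": "phi3:latest"}, {"name": "llama3:latest"}]}
print(parse_model_names(sample_response))
```

With Ollama running, `list_local_models()` returns the names you can expect to see offered in the Add Ollama Model flow, minus any that are already added to AI Toolkit.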

Add a custom model with OpenAI compatible endpoint

If you have a self-hosted or deployed model that is accessible from the internet with an OpenAI compatible endpoint, you can add it to AI Toolkit and use it in the playground.

- From one of the entry points above, select Add Custom Model.

- Enter the OpenAI compatible endpoint URL and the required information.

To add a self-hosted or locally running Ollama model:

- Select + Add model in the model catalog.

- In the model Quick Pick, choose Ollama or Custom model.

- Enter the required details to add the model.
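Before adding a custom endpoint, it can help to confirm that it accepts requests in the OpenAI chat completions format. The following Python sketch builds such a request with the standard library only. The base URL, model name, and API key in the usage lines are placeholders, and the helper names are hypothetical rather than part of AI Toolkit.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build a POST request for an OpenAI compatible /chat/completions route."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Many hosted endpoints expect a bearer token; local servers often do not.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage example (placeholders): Ollama serves its OpenAI compatible API under /v1.
request = build_chat_request("http://localhost:11434/v1", "<model-name>", "Hello")
print(request.full_url)
```

Building the request separately from sending it makes it easy to inspect the URL and payload first. Call `send_chat_request(request)` only when the endpoint is actually running.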

Add a custom ONNX model

To add a custom ONNX model, first convert it to the AI Toolkit model format using the model conversion tool. After conversion, add the model to AI Toolkit.

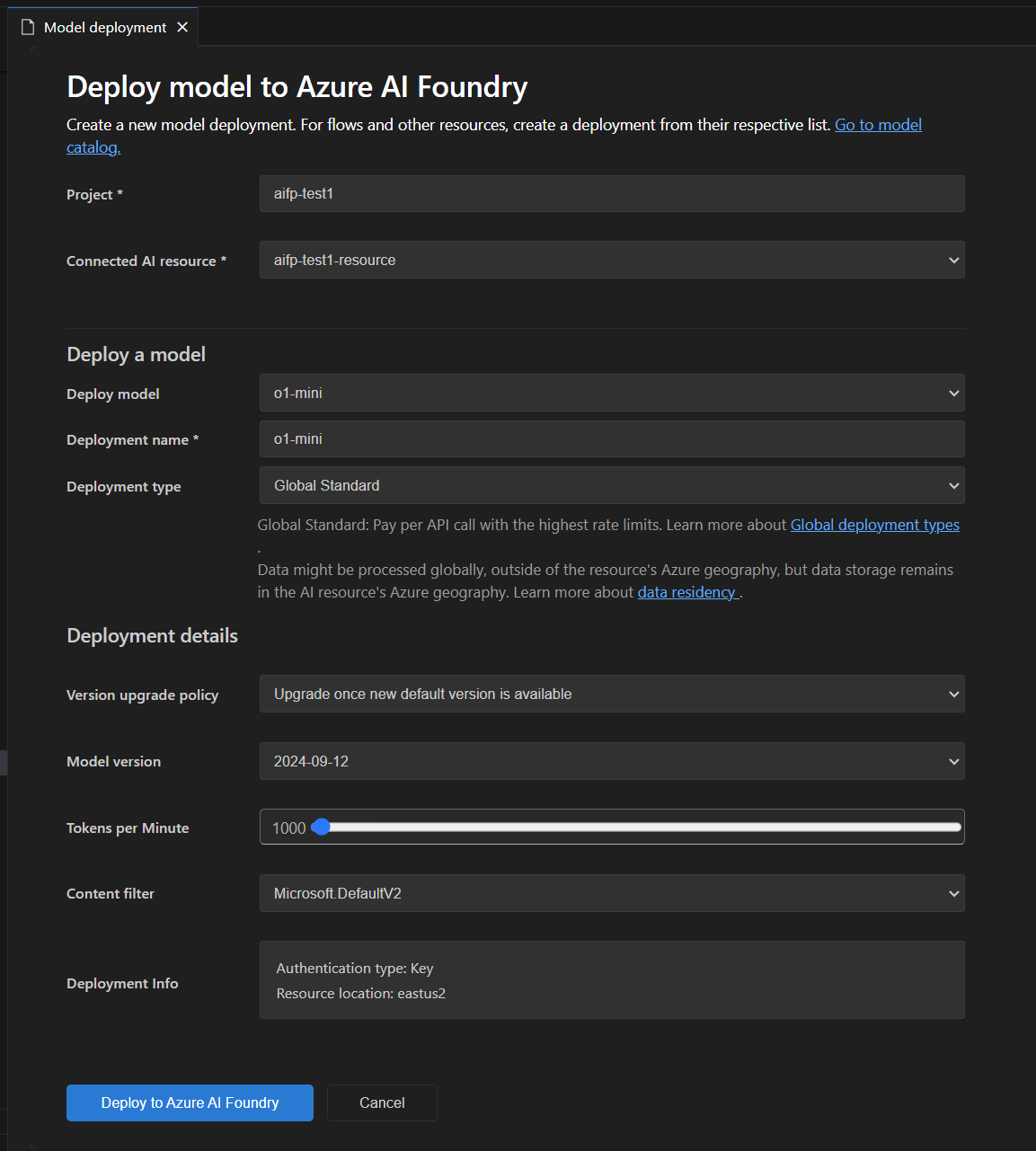

Deploy a model to Microsoft Foundry

You can deploy a model to Microsoft Foundry directly from AI Toolkit. This allows you to run the model in the cloud and access it via an endpoint.

- From the model catalog, select the model you want to deploy.

- Select Deploy to Microsoft Foundry, either from the dropdown menu or directly from the Deploy to Microsoft Foundry button.

- In the model deployment tab, enter the required information, such as the model name, description, and any additional settings.

- Select Deploy to Microsoft Foundry to start the deployment process.

- A dialog appears to confirm the deployment. Review the details and select Deploy to proceed.

- Once the deployment is complete, the model is available in the MY MODELS section of AI Toolkit, and you can use it in the Playground or Agent Builder.

Select a model for testing

You can test a model in the playground for chat completions.

Use the actions on the model card in the model catalog:

- Try in Playground: Load the selected model for testing in the Playground.

- Try in Agent Builder: Load the selected model in the Agent Builder to build AI agents.

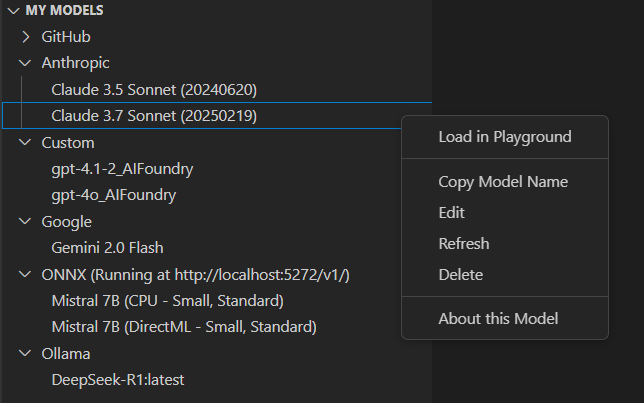

Manage models

You can manage your models in the MY MODELS section of the AI Toolkit view. Here you can:

- View the list of models you have added to AI Toolkit.

- Right-click a model to access options such as:

  - Load in Playground: Load the model in the Playground for testing.

  - Copy Model Name: Copy the model name to the clipboard for use in other contexts, such as your code integration.

  - Refresh: Refresh the model configuration to ensure you have the latest settings.

  - Edit: Modify the model settings, such as the API key or endpoint.

  - Delete: Remove the model from AI Toolkit.

  - About this Model: View detailed information about the model, including its publisher, source, and supported features.

- Right-click the ONNX section title to access options such as:

  - Start Server: Start the ONNX server to run ONNX models locally.

  - Stop Server: Stop the ONNX server if it is running.

  - Copy Endpoint: Copy the ONNX server endpoint to the clipboard for use in other contexts, such as your code integration.
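When you paste a copied model name or endpoint into your own code, one option is to keep both values in environment variables instead of hard-coding them. The following Python sketch shows one way to load and validate them. The variable names `AITK_ENDPOINT` and `AITK_MODEL_NAME` are hypothetical choices for this example, not settings that AI Toolkit defines, and the endpoint in the usage lines is a placeholder.

```python
from dataclasses import dataclass
from typing import Mapping
from urllib.parse import urlparse


@dataclass(frozen=True)
class ModelSettings:
    endpoint: str
    model_name: str


def load_model_settings(env: Mapping[str, str]) -> ModelSettings:
    """Read the copied endpoint and model name from a mapping such as os.environ."""
    # Hypothetical variable names chosen for this example.
    endpoint = env.get("AITK_ENDPOINT", "").strip()
    model_name = env.get("AITK_MODEL_NAME", "").strip()

    parsed = urlparse(endpoint)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"AITK_ENDPOINT is not a valid HTTP(S) URL: {endpoint!r}")
    if not model_name:
        raise ValueError("AITK_MODEL_NAME is empty")

    # Drop trailing slashes so callers can append route paths consistently.
    return ModelSettings(endpoint=endpoint.rstrip("/"), model_name=model_name)


# Usage example with placeholder values; in real code, pass os.environ instead.
settings = load_model_settings(
    {"AITK_ENDPOINT": "http://localhost:8080/v1/", "AITK_MODEL_NAME": "my-model"}
)
print(settings)
```

Validating the values at startup surfaces a mistyped or partially pasted endpoint before the first request is sent.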

License and sign-in

Some models require a publisher or hosting-service license and an account to sign in. In that case, you are prompted to provide this information before you can run the model in the model playground.

What you learned

In this article, you learned how to:

- Explore and manage generative AI models in AI Toolkit.

- Find models from various sources, including GitHub, ONNX, OpenAI, Anthropic, Google, Ollama, and custom endpoints.

- Add models to your toolkit and deploy them to Microsoft Foundry.

- Add custom models, including Ollama and OpenAI compatible models, and test them in the playground or agent builder.

- Use the model catalog to view available models and select the best fit for your AI application needs.

- Use filters and search to find models quickly.

- Browse models by category, such as Popular, GitHub, ONNX, and Ollama.

- Convert and add custom ONNX models using the model conversion tool.

- Manage models in MY MODELS, including editing, deleting, refreshing, and viewing details.

- Start and stop the ONNX server and copy endpoints for local models.

- Handle license and sign-in requirements for some models before testing them.